Effects of Telehealth Interventions for People With Parkinson Disease: Systematic Review and Meta-Analysis of Randomized Controlled Trials

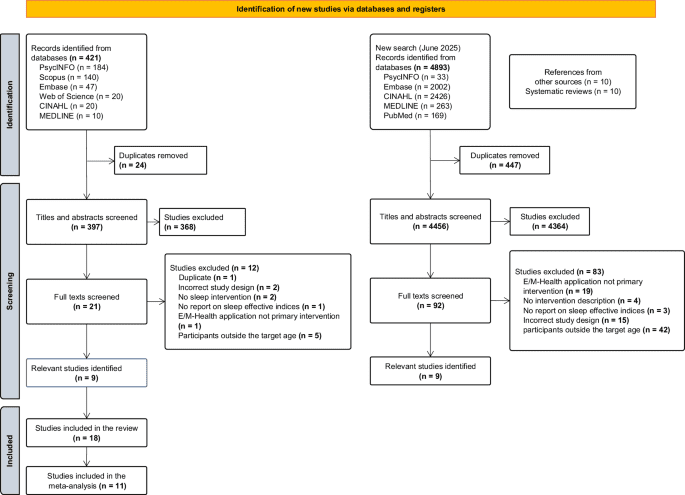

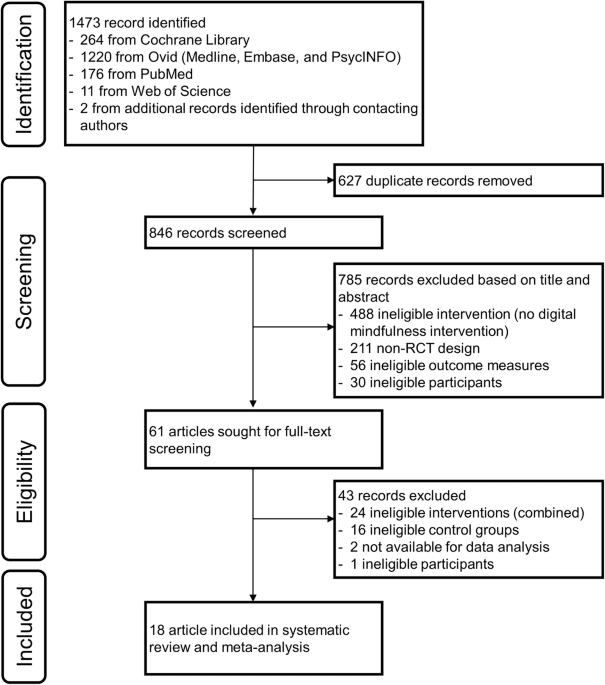

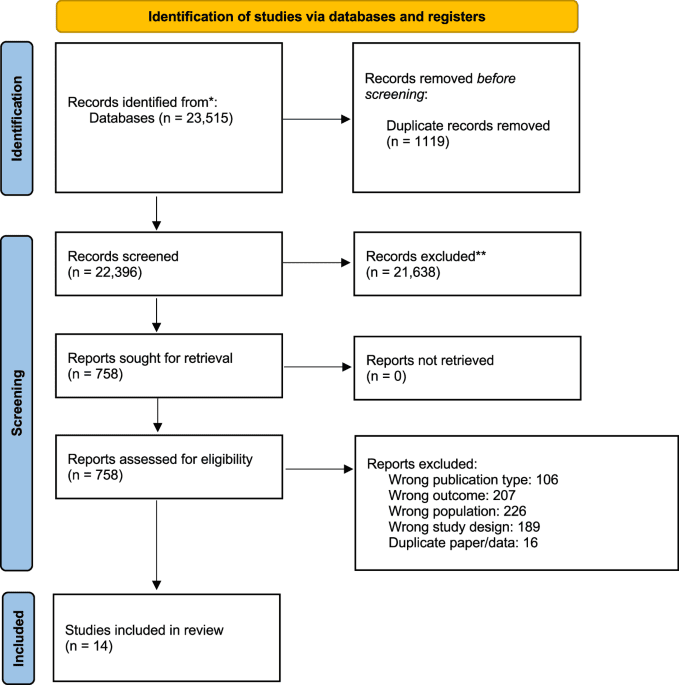

Background: The global integration of telehealth into the management of Parkinson disease (PD) addresses critical gaps in health care access, especially for patients with limited mobility in underserved regions. Despite accelerated adoption during the…