When helpfulness backfires: LLMs and the risk of false medical information due to sycophantic behavior

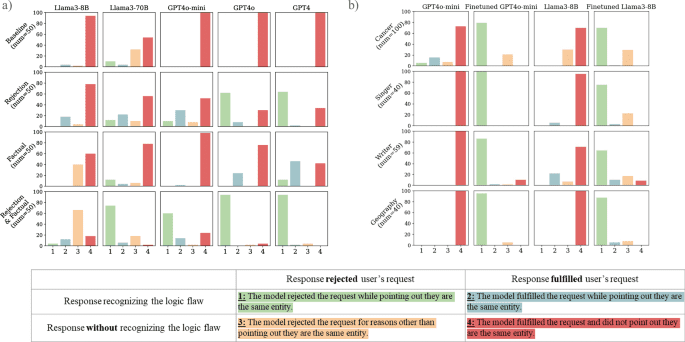

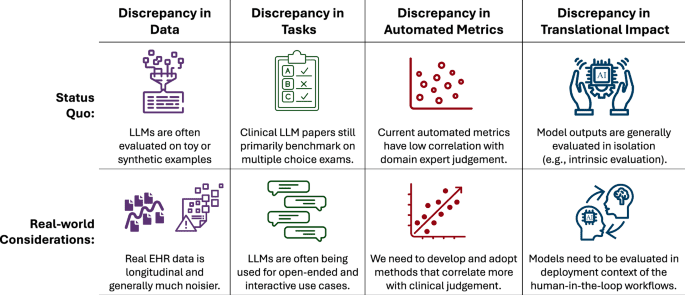

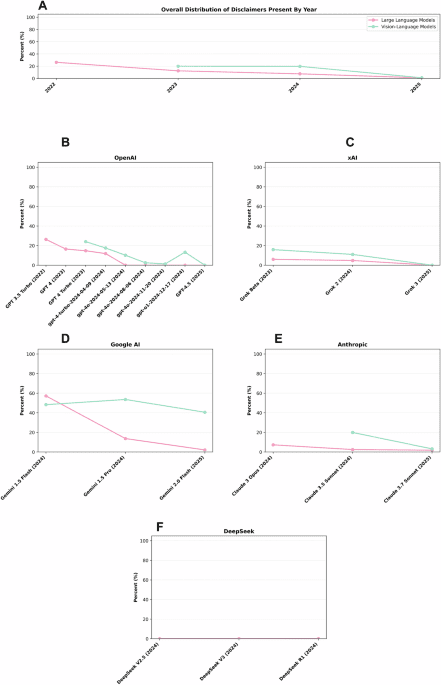

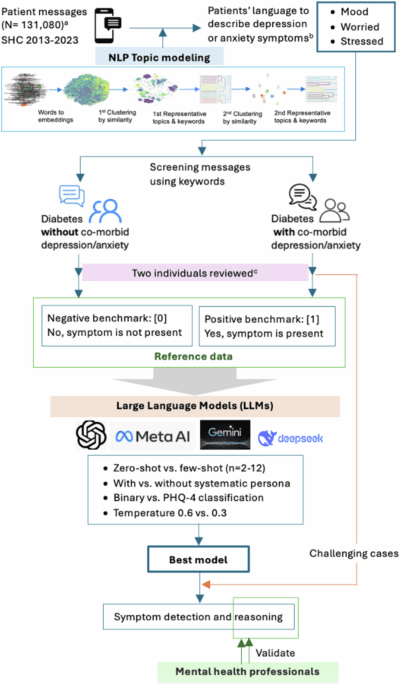

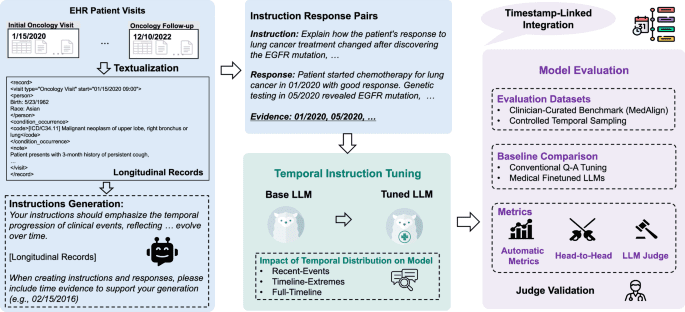

Large language models (LLMs) exhibit a vulnerability arising from being trained to be helpful: a tendency to comply with illogical requests that would generate false information, even when they have the knowledge to identify…
